Today we’re announcing PromptApps: a way to package expert prompting into reliable, reusable mini-apps that anyone can run without needing to understand prompting, model selection, or AI mechanics.

PromptApps are intentionally narrow in scope. They are atomic, non-conversational, and non-agentic by design. That constraint is not a limitation. It is the feature that makes PromptApps dependable, easy to standardize, and easy to share as a building block in larger workflows.

A PromptApp is a governed prompt template with a defined input and output contract.

It encodes expert prompt construction, specifies the required inputs, and produces outputs in a predictable shape. It also enforces constraints and validations across two surfaces.

First, the prompt template variables. A PromptApp validates that inputs are present when required, conform to expected types and formats, and remain within bounded limits such as length, allowed ranges, enumerations, and domain-specific rules.

Second, the model configuration. A PromptApp constrains and validates the LLM and the parameters that materially affect its behavior. This includes the choice of model and the runtime settings that control variability, structure, and output stability.

The goal is to turn a prompt into an application artifact that can be reused safely, not a one-off interaction.
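The two validation surfaces can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the PromptApps implementation. `InputField`, `ModelPolicy`, `validate_inputs`, `validate_model_config`, and `render_prompt` are hypothetical names, `model-a` is a placeholder model name, and no model is actually called. The sketch shows required-input, type, length, enumeration, range, and domain-rule checks on template variables, plus an allow-list of models and bounded runtime parameters such as temperature.

```python
from dataclasses import dataclass, field
from string import Template
from typing import Any, Callable, Optional


@dataclass
class InputField:
    """Hypothetical contract for one prompt template variable."""
    name: str
    expected_type: type = str
    required: bool = True
    max_length: Optional[int] = None              # length bound for strings
    allowed: Optional[set] = None                 # enumeration of permitted values
    value_range: Optional[tuple] = None           # (min, max) for numeric inputs
    rule: Optional[Callable[[Any], bool]] = None  # domain-specific check


@dataclass
class ModelPolicy:
    """Hypothetical constraints on the model choice and its runtime parameters."""
    allowed_models: set
    param_ranges: dict = field(default_factory=dict)  # e.g. {"temperature": (0.0, 0.3)}


def validate_inputs(fields: list, inputs: dict) -> list:
    """Check template variables; return all errors (empty list means valid)."""
    errors = []
    for f in fields:
        if f.name not in inputs:
            if f.required:
                errors.append(f"{f.name}: missing required input")
            continue
        value = inputs[f.name]
        if not isinstance(value, f.expected_type):
            errors.append(f"{f.name}: expected {f.expected_type.__name__}")
            continue  # later checks assume the right type
        if f.max_length is not None and len(value) > f.max_length:
            errors.append(f"{f.name}: longer than {f.max_length}")
        if f.allowed is not None and value not in f.allowed:
            errors.append(f"{f.name}: not one of {sorted(f.allowed)}")
        if f.value_range is not None:
            low, high = f.value_range
            if not low <= value <= high:
                errors.append(f"{f.name}: outside {f.value_range}")
        if f.rule is not None and not f.rule(value):
            errors.append(f"{f.name}: failed domain rule")
    return errors


def validate_model_config(policy: ModelPolicy, model: str, params: dict) -> list:
    """Check the model against an allow-list and each parameter against its bounds."""
    errors = []
    if model not in policy.allowed_models:
        errors.append(f"model {model!r} is not allowed")
    for key, value in params.items():
        if key not in policy.param_ranges:
            errors.append(f"parameter {key!r} is not governed by this app")
            continue
        low, high = policy.param_ranges[key]
        if not low <= value <= high:
            errors.append(f"parameter {key!r}={value} outside [{low}, {high}]")
    return errors


def render_prompt(template: str, fields: list, inputs: dict) -> str:
    """Fill the template only if every input satisfies its contract."""
    errors = validate_inputs(fields, inputs)
    if errors:
        raise ValueError("; ".join(errors))
    return Template(template).substitute(inputs)


# Example: a hypothetical contract-review app with two inputs.
fields = [
    InputField("contract_text", max_length=20_000),
    InputField("jurisdiction", allowed={"US", "EU", "UK"}),
]
policy = ModelPolicy(allowed_models={"model-a"}, param_ranges={"temperature": (0.0, 0.3)})
print(validate_inputs(fields, {"contract_text": "Sample clause.", "jurisdiction": "CA"}))
print(validate_model_config(policy, "model-a", {"temperature": 0.7}))
```

Returning every error instead of stopping at the first one lets the person running the app fix all of their inputs in one pass; only `render_prompt` refuses to proceed until the contract is satisfied.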

A PromptApp is not a chat assistant. It is non-conversational, does not rely on memory across turns, and does not negotiate its way into an answer. It is also not an agent. It does not call tools, query databases, or execute multi-step actions beyond the minimal mechanics of reading inputs and saving outputs.

This boundary keeps PromptApps simple, auditable, and easy to adopt.

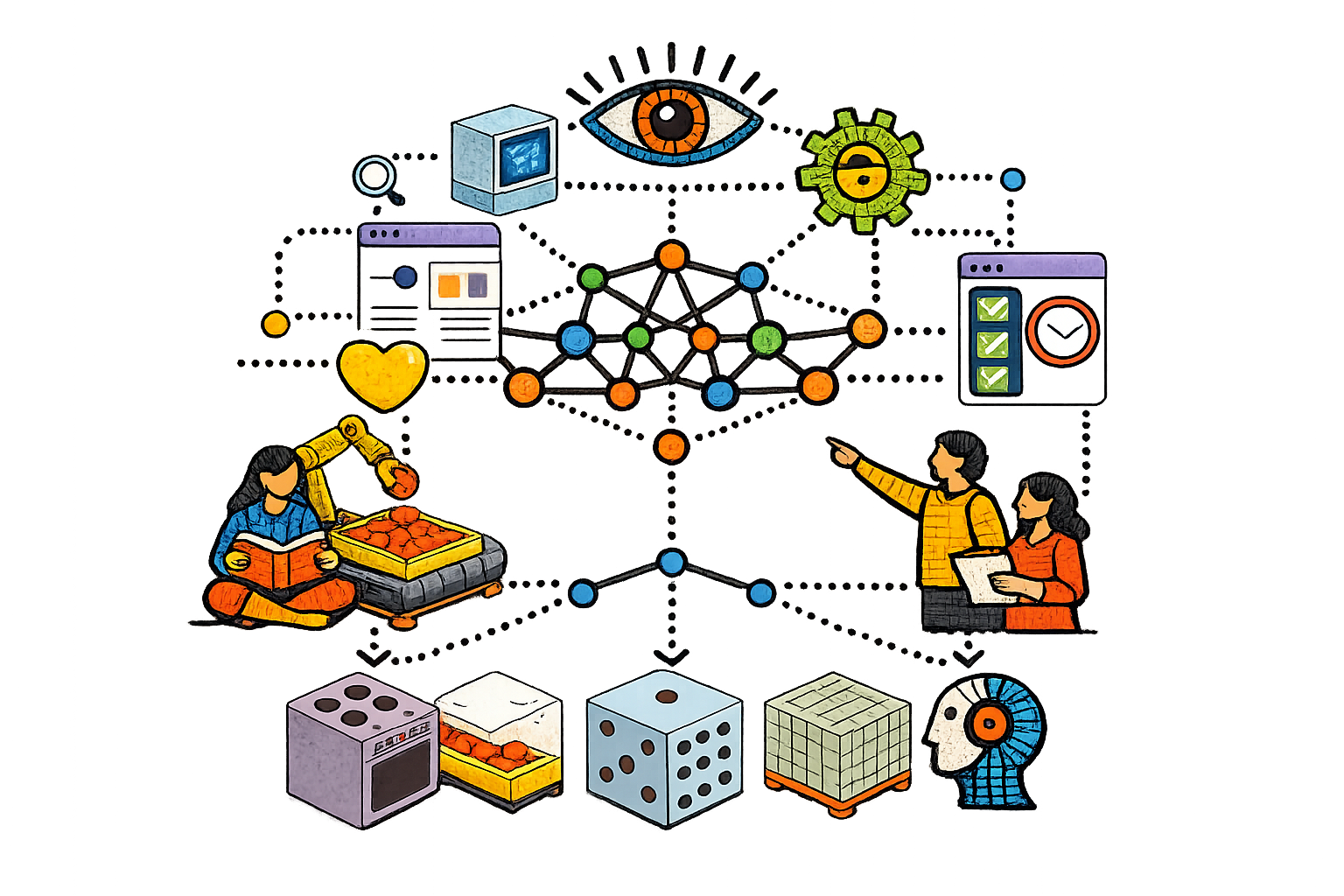

Because PromptApps are atomic and non-agentic, they compose naturally into linear pipelines.

A pipeline is a sequence of PromptApps where the output of one stage becomes the input to the next. The key difference from many agentic workflows is oversight: users can review, approve, and correct outputs at each stage rather than only inspecting the end result.

This design makes workflows easier to trust and easier to improve. Each stage is bounded, testable, and replaceable without destabilizing everything else.
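A linear pipeline with a review point after every stage might look like the following sketch. It assumes each PromptApp can be treated as a function from a dict of inputs to a dict of outputs, and it models the output contract as a set of required keys. `Stage`, `run_pipeline`, and the reviewer callback are hypothetical names, and the two example stages are plain Python stand-ins rather than model calls. The reviewer can approve an output unchanged, return a corrected version that feeds the next stage, or return `None` to stop the run.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Stand-in for one PromptApp run: dict of inputs in, dict of outputs out.
StageFn = Callable[[dict], dict]
# A reviewer sees a stage's output and returns it (approved), a corrected
# copy, or None to halt the pipeline.
Reviewer = Callable[[str, dict], Optional[dict]]


@dataclass
class Stage:
    name: str
    run: StageFn
    output_keys: frozenset  # hypothetical output contract: keys that must be present


def run_pipeline(stages: list, initial_input: dict, review: Reviewer) -> Optional[dict]:
    data = initial_input
    for stage in stages:
        output = stage.run(data)
        # Enforce the output contract before a human ever sees the result.
        missing = stage.output_keys - set(output)
        if missing:
            raise ValueError(f"{stage.name}: output missing {sorted(missing)}")
        reviewed = review(stage.name, output)
        if reviewed is None:
            return None  # a reviewer stopped the pipeline at this stage
        data = reviewed  # approved or corrected output feeds the next stage
    return data


# Example stages (plain functions standing in for PromptApps).
def extract(d: dict) -> dict:
    return {"clauses": d["text"].split(". ")}


def summarize(d: dict) -> dict:
    return {"summary": f"{len(d['clauses'])} clauses reviewed"}


def approve_all(stage_name: str, output: dict) -> dict:
    return output


stages = [
    Stage("extract", extract, frozenset({"clauses"})),
    Stage("summarize", summarize, frozenset({"summary"})),
]
print(run_pipeline(stages, {"text": "A. B. C"}, approve_all))
# prints {'summary': '3 clauses reviewed'}
```

Because each stage only sees the reviewed output of the previous one, replacing a stage means swapping a single `Stage` entry while the rest of the pipeline stays as it is.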

While PromptApps fit well in enterprise and compliance-heavy environments, they are not limited to them. The same qualities that make PromptApps governable also make them broadly publishable.

A PromptApp can be created by an expert in a specific domain and shared the way you would share a template, a playbook, or a specialized internal tool. But unlike a generic template, a PromptApp carries its own constraints, validations, and output contract. It behaves consistently for the user who runs it, even if that user has no interest in learning prompting or AI.

PromptApps also unlock a simple idea: subject-matter experts (SMEs) and domain specialists can publish PromptApps as monetized expert mini-apps.

Instead of selling time, an SME can package repeatable expertise into a PromptApp that generates subject-relevant outputs for users. The user’s experience stays straightforward: provide the required inputs, receive a structured output that matches a known contract.

This opens the door to a marketplace of PromptApps.

In other words, PromptApps can be published and consumed as applications, not as prompts and not as chats.

PromptApps is built on a simple belief: the scalable path for AI is to turn expert prompting into reusable application artifacts with clear contracts, constraints, and predictable outputs.

Those artifacts can power overseen pipelines inside teams, and they can also become monetized expert mini-apps published by SMEs across verticals.

PromptApps is not a chatbot. It is not an agent. It is a new unit of software for LLM-powered work: atomic by design, composable by default, and shareable beyond the boundaries of models, parameters, and interfaces.